Table Partitioning In MySQL NDB Cluster and What’s New (Part I)

This blog is about table partitioning in NDB Cluster. We will see how this feature has been enhanced from version to version. Also we will see which partitioning user should use under which scenario. Here I will assume that the user has some knowledge on NDB cluster.

Data distribution and table partitioning are usually coupled together. In NDB, when we talk about table partitioning, we mean ‘data distribution’ mainly as NDB doesn’t fully support RANGE, HASH or LIST partitioning. Most of the enhancements made to partitioning over the years are about ‘data distribution’ rather than supporting various partitioning schemes. The main goals of data distribution are:

NDB Cluster is a distributed database system so when a user creates a table, by default, the table data will be partitioned automatically even if no partition scheme is mentioned during the table creation. By default, a table is partitioned based on the primary key. If no primary key is mentioned during table creation then NDB will create a hidden primary key and partition the table based on this hidden key.

MySQL Server supports many partition schemes like range partitioning, list partitioning, hash partitioning, key partitioning but NDB cluster fully supports only one partition scheme i.e. key partitioning (PARTITION BY KEY). A table can be partitioned by one or more columns from its primary key. By default the number of partitions to use is calculated based on the number of data nodes in the cluster multiplied by the number of LDM (local data manager) threads on a single data node. This default gives a good balance of data (and associated memory, storage, cpu and network usages) across the system.

The LDM threads play a key role in table partitioning as these are the components which actually store and retrieve table data. All access to a particular table partition is managed by a single LDM thread, with separate LDM threads managing separate table partitions independently. The number of LDM threads created in a data node can be set explicitly through configuration variables - either by MaxNoOfExecutionThreads or ThreadConfig. Any change to the number of LDM threads normally requires an IS (Initial cluster system restart) to take effect.

Lets look at how table partitioning has evolved from older NDB versions like 7.4 to latest release 8.0.23 thru an example.

Here we have not specified explicitly how many LDMs we need i.e a default selection, we have not chosen from the configuration variable MaxNoOfExecutionThreads. For default setting or upto value ‘3’, the number of LDMs is set to 1. So in a cluster having 4 data nodes with one ldm, the total number of table partition would be #ldm * #data nodes = 4 * 1 = 4.

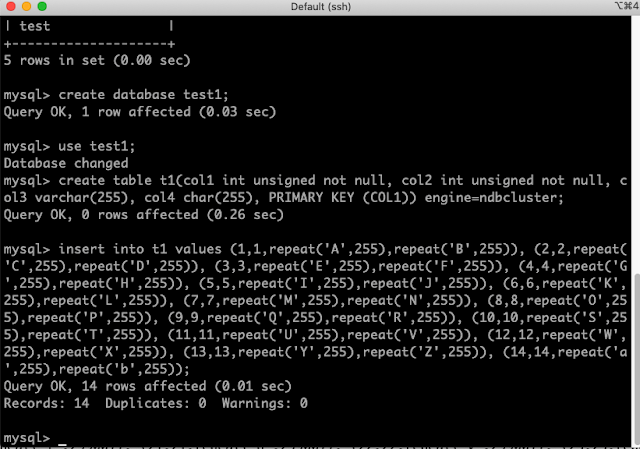

Lets take a look by creating a table, inserting a few rows into it and checking the number of partition thru ndb tool (ndb_desc):

Lets look at the partition count thru NDB API (bin/ndb_desc):

From the above image, one can see that FragmentCount: 4, and this is with the default setting. Each fragment/partition has 2 replicas, and so is stored on 2 nodes and that all nodes have 2 fragment/partition replicas of this table – e.g. the distribution is balanced.

Lets set the MaxNoOfExecutionThreads to ‘8’ which means we set the number of LDMs as 4. For details about mapping of MaxNoOfExecutionThreads to each threads type, please refer to the manual here.

Again create the same table 't1' and insert few rows into it. Check the partition count from the bin/ndb_desc API tool.

From the above image, we can see that FragmentCount: 16 i.e. #ldms * #data_nodes = 4 * 4 = 16. The fragments are balanced across the nodes.

This is with ndbmtd (multithreaded ndbd), lets check the same with a single thread ndbd. Lets verify if there is any differences.

From the above image, one can see that FragmentCount: 4, even if we set MaxNoOfExecutionThreads to ‘8’. The reason is when ndbd is used on the data node then number of LDMs is always set to ‘1’, so total number of partition is #data_nodes * #ldms = 4 * 1 = 4.

The above is automatic way of table partitioning, user can also do table partitioning explicitly while creating table through PARTITION BY KEY(column names) [PARTITIONS "how_many"] clause.

NDB supports PARTITION BY KEY (<column names>). All columns used for partitioning must be part of the primary key. When no PARTITION BY clause is given, all primary key columns are used. By using PARTITION BY clause, partition will occur based on a subset of primary key columns that is useful for controlling which rows go to which partition and is normally or best used without also specifying the number of partitions.

Lets go back to our multithreaded ndbd (ndbmtd) case, we will create the same table ‘t1’ again and inserts few rows into it. Then will check the partition count. The only difference this time is that, we will create the table with PARTITION BY KEY with PARTITIONS clause.

create table t1(col1 int unsigned not null, col2 int unsigned not null, col3 varchar(255), col4 char(255), PRIMARY KEY (col1)) engine=ndbcluster PARTITION BY KEY(col1) PARTITIONS 8;

Case -1:

We will partition with lesser number (8) than the automatic partition count (i.e. 16)

Lets check the partition count from ndb_desc:

From the below image we can see that table has been partition into 8.

Case -2:

We will try to partition the table with more than (20) the automatic partition count (16).

From the above image we can see that table has been partition into 20. So from the above two cases, we can see that when explicitly partition is used, partition of table is completely based on the PARTITION number rather than the automatic way of partition. Now the obvious question is which one to choose i.e. the automatic way of partitioning or explicit way of partitioning? It is highly recommanded to use the automatic partition than the explicit way of partitioning in most of the cases.

This concludes the first part of table partitioning.

Data distribution and table partitioning are usually coupled together. In NDB, when we talk about table partitioning, we mean ‘data distribution’ mainly as NDB doesn’t fully support RANGE, HASH or LIST partitioning. Most of the enhancements made to partitioning over the years are about ‘data distribution’ rather than supporting various partitioning schemes. The main goals of data distribution are:

- Balance: Avoid premature bottlenecks of memory, storage, cpu or network

- Scaling: Make use of all resources, add capacity with new resources

- Efficiency: Locality of access and minimal unnecessary data transfer

NDB Cluster version (e.g. 7.4.32, 7.5.21, 8.0.23)

1 Management server

4 Data nodes servers (ndbd, ndbmtd)

1 Mysqld server

4 API nodes

NoOfReplicas = 2

NDB Cluster is a distributed database system so when a user creates a table, by default, the table data will be partitioned automatically even if no partition scheme is mentioned during the table creation. By default, a table is partitioned based on the primary key. If no primary key is mentioned during table creation then NDB will create a hidden primary key and partition the table based on this hidden key.

MySQL Server supports many partition schemes like range partitioning, list partitioning, hash partitioning, key partitioning but NDB cluster fully supports only one partition scheme i.e. key partitioning (PARTITION BY KEY). A table can be partitioned by one or more columns from its primary key. By default the number of partitions to use is calculated based on the number of data nodes in the cluster multiplied by the number of LDM (local data manager) threads on a single data node. This default gives a good balance of data (and associated memory, storage, cpu and network usages) across the system.

The LDM threads play a key role in table partitioning as these are the components which actually store and retrieve table data. All access to a particular table partition is managed by a single LDM thread, with separate LDM threads managing separate table partitions independently. The number of LDM threads created in a data node can be set explicitly through configuration variables - either by MaxNoOfExecutionThreads or ThreadConfig. Any change to the number of LDM threads normally requires an IS (Initial cluster system restart) to take effect.

Lets look at how table partitioning has evolved from older NDB versions like 7.4 to latest release 8.0.23 thru an example.

Lets have a cluster running on 7.4 NDB version.

Lets look at the configuration file (shown below as part of it):

Here we have not specified explicitly how many LDMs we need i.e a default selection, we have not chosen from the configuration variable MaxNoOfExecutionThreads. For default setting or upto value ‘3’, the number of LDMs is set to 1. So in a cluster having 4 data nodes with one ldm, the total number of table partition would be #ldm * #data nodes = 4 * 1 = 4.

Lets take a look by creating a table, inserting a few rows into it and checking the number of partition thru ndb tool (ndb_desc):

Lets look at the partition count thru NDB API (bin/ndb_desc):

From the above image, one can see that FragmentCount: 4, and this is with the default setting. Each fragment/partition has 2 replicas, and so is stored on 2 nodes and that all nodes have 2 fragment/partition replicas of this table – e.g. the distribution is balanced.

Lets set the MaxNoOfExecutionThreads to ‘8’ which means we set the number of LDMs as 4. For details about mapping of MaxNoOfExecutionThreads to each threads type, please refer to the manual here.

Again create the same table 't1' and insert few rows into it. Check the partition count from the bin/ndb_desc API tool.

From the above image, we can see that FragmentCount: 16 i.e. #ldms * #data_nodes = 4 * 4 = 16. The fragments are balanced across the nodes.

This is with ndbmtd (multithreaded ndbd), lets check the same with a single thread ndbd. Lets verify if there is any differences.

From the above image, one can see that FragmentCount: 4, even if we set MaxNoOfExecutionThreads to ‘8’. The reason is when ndbd is used on the data node then number of LDMs is always set to ‘1’, so total number of partition is #data_nodes * #ldms = 4 * 1 = 4.

The above is automatic way of table partitioning, user can also do table partitioning explicitly while creating table through PARTITION BY KEY(column names) [PARTITIONS "how_many"] clause.

NDB supports PARTITION BY KEY (<column names>). All columns used for partitioning must be part of the primary key. When no PARTITION BY clause is given, all primary key columns are used. By using PARTITION BY clause, partition will occur based on a subset of primary key columns that is useful for controlling which rows go to which partition and is normally or best used without also specifying the number of partitions.

Lets go back to our multithreaded ndbd (ndbmtd) case, we will create the same table ‘t1’ again and inserts few rows into it. Then will check the partition count. The only difference this time is that, we will create the table with PARTITION BY KEY with PARTITIONS clause.

create table t1(col1 int unsigned not null, col2 int unsigned not null, col3 varchar(255), col4 char(255), PRIMARY KEY (col1)) engine=ndbcluster PARTITION BY KEY(col1) PARTITIONS 8;

Case -1:

We will partition with lesser number (8) than the automatic partition count (i.e. 16)

Lets check the partition count from ndb_desc:

From the below image we can see that table has been partition into 8.

Case -2:

We will try to partition the table with more than (20) the automatic partition count (16).

Lets check the partition count from ndb_desc:

From the above image we can see that table has been partition into 20. So from the above two cases, we can see that when explicitly partition is used, partition of table is completely based on the PARTITION number rather than the automatic way of partition. Now the obvious question is which one to choose i.e. the automatic way of partitioning or explicit way of partitioning? It is highly recommanded to use the automatic partition than the explicit way of partitioning in most of the cases.

This concludes the first part of table partitioning.

Comments

Post a Comment