MySQL NDB Cluster Backup & Restore In An Easy Way

In this post, we will see how easily a user can take an NDB Cluster backup and then restore it. NDB Cluster supports online backups, which are taken while transactions are modifying the data being backed up. Each backup captures all of the table content stored in the cluster.

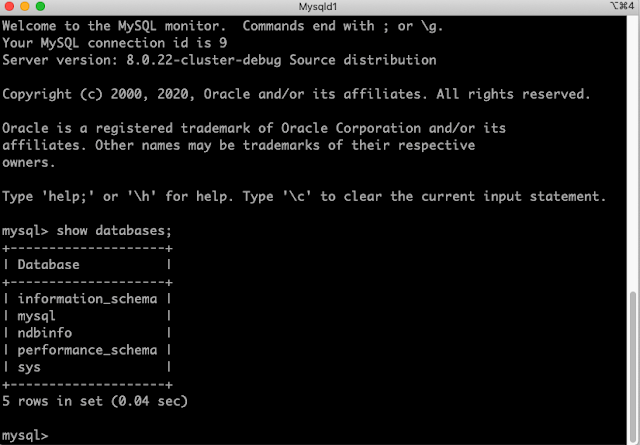

Users can take a backup in the following states:

- When the cluster is live and fully operational

- When the cluster is live, but in a degraded state:

  - Some data nodes are down

  - Some data nodes are restarting

- During read and write transactions

Users can restore backups in the following cluster environments:

- Restore to the same physical cluster

- Restore into a different physical cluster

- Restore into a cluster with a different configuration, e.g. a backup taken from a cluster with 4 data nodes restored into a cluster with 8 data nodes

- Restore into a different cluster version

Backups can be restored flexibly:

- Restore can be run locally or remotely relative to the data nodes

- Restore can be run in parallel across data nodes

- A partial set of the tables captured in the backup can be restored

Use cases of Backup & Restore:

- Disaster recovery - setting up a cluster from scratch

- Setting up NDB Cluster asynchronous replication

- Recovery from user/DBA accidents such as dropping a table or database, unwanted schema changes, etc.

- NDB Cluster software upgrades

Limitations:

- Only schemas and table data for tables stored using the NDB storage engine are backed up

- Views, stored procedures, triggers, and tables/schemas from other storage engines such as InnoDB are not backed up. Users need to use other MySQL backup tools such as mysqldump or mysqlpump to capture these

- Only full backups are supported. Incremental and partial backups are not supported.

NDB Cluster Backup & Restore concept in brief:

In NDB Cluster, tables are horizontally partitioned into a set of partitions, which are then distributed across the data nodes in the cluster. The data nodes are logically grouped into nodegroups. All data nodes in a nodegroup (up to four) contain the same sets of partitions, kept in sync at all times. Different nodegroups contain different sets of partitions. At any time, each partition is logically owned by just one node in one nodegroup, which is responsible for including it in a backup.

When a backup starts, each data node scans the set of table partitions it owns, writing their records to its local disk. At the same time, a log of ongoing changes is also recorded. The scanning and logging are synchronised so that the backup is a snapshot at a single point in time. Data is distributed across all the data nodes, and the backup occurs in parallel across all nodes, so that all data in the cluster is captured. At the end of a backup, each data node has recorded a set of files (*.Data, *.ctl, *.log), each containing a subset of the cluster data.

During restore, each set of files is restored (in parallel) to bring the cluster to the snapshot state. The CTL file is used to restore the schema, the DATA file is used to restore most of the data, and the LOG file is used to ensure snapshot consistency.

Let’s look at the NDB Cluster backup and restore feature through an example.

To demonstrate this feature, let’s create an NDB Cluster with the environment below.

NDB Cluster version 8.0.22:

- 2 management servers

- 4 data node servers

- 2 mysqld servers

- 6 API nodes

- NoOfReplicas = 2

Step 1:

Before we start the cluster, let’s modify the cluster configuration file (config.ini) for backup. When a backup starts, it creates 3 files (BACKUP-backupid-0.nodeid.Data, BACKUP-backupid.nodeid.ctl, BACKUP-backupid.nodeid.log) under a directory named BACKUP. By default, the BACKUP directory is created under each data node’s data directory. It is advisable to place the BACKUP directory outside the data directory. This can be done by adding the configuration parameter ‘BackupDataDir’ to the cluster configuration file, i.e. config.ini.

In the example below, I have assigned a path to ‘BackupDataDir’ in config.ini:

BackupDataDir=/export/home/saroj/mysql-tree/8.0.22/ndbd/node1/data4
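To double-check which backup directory a configuration file actually sets, a small helper along the following lines could be used. This is only a sketch: the function name `backup_data_dir` is hypothetical, the sample file places the parameter in an `[ndbd]` section as an assumption, and the parameter name is matched case-insensitively purely for convenience.

```shell
# Print the BackupDataDir value(s) set in a cluster configuration file.
# $1: path to config.ini
backup_data_dir() {
    awk '
        /^[ \t]*#/ { next }                     # skip comment lines
        {
            key = $0
            sub(/=.*/, "", key)                 # text before the first "="
            gsub(/[ \t]/, "", key)
            if (index($0, "=") > 0 && tolower(key) == "backupdatadir") {
                val = $0
                sub(/^[^=]*=[ \t]*/, "", val)   # text after the first "="
                sub(/[ \t]+$/, "", val)
                print val
            }
        }
    ' "$1"
}

# Demo on a throwaway file that mimics a fragment of config.ini
# (section placement is an assumption made for this sketch).
sample=$(mktemp)
printf '[ndbd]\nNodeId=1\nBackupDataDir=/export/home/saroj/mysql-tree/8.0.22/ndbd/node1/data4\n' > "$sample"
backup_data_dir "$sample"
rm -f "$sample"
```

Running the helper against the real config.ini before starting the cluster is a cheap way to confirm that backups will land outside the data directory.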

Step 2:

Start the cluster with the updated configuration.

Step 3:

Now that the cluster is up and running, let’s create a database and a table, and run some transactions on it.

Let’s insert rows into table ‘t1’, either through SQL or through any other tool. We keep inserting rows through SQL until the cluster holds a significant amount of data.
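For readers who want to reproduce a similar data set, the sketch below prints SQL that could be piped into the mysql client. The helper name `seed_sql` and the column definitions are purely illustrative, since the post does not show the actual schema of ‘t1’; only the database name, the table name and the NDB storage engine come from the example.

```shell
# Hypothetical helper: print SQL that creates test1.t1 and inserts N rows.
# The column layout is made up for illustration.
# $1: number of rows to insert
seed_sql() {
    rows=$1
    echo "CREATE DATABASE IF NOT EXISTS test1;"
    echo "CREATE TABLE IF NOT EXISTS test1.t1 (id INT PRIMARY KEY, payload VARCHAR(64)) ENGINE=NDBCLUSTER;"
    i=1
    while [ "$i" -le "$rows" ]; do
        echo "INSERT INTO test1.t1 VALUES ($i, 'row $i');"
        i=$((i + 1))
    done
}

# Print the statements for 3 rows; pipe the output into the mysql client to apply them.
seed_sql 3
```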

Let’s check the row count of table ‘t1’. From the image below, we can see that table ‘t1’ has 396120 rows.

Step 4:

Now issue a backup command from the management client (bin/ndb_mgm) while some transactions on table ‘t1’ are in progress. We will delete rows from table ‘t1’ and issue a backup command in parallel.

While the delete operations are going on, issue a backup command from the management client:
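As a rough sketch, the backup can also be started non-interactively through the `-e` option of ndb_mgm. The helper name `start_backup_cmd` is hypothetical, the connect string is the one used for the restore commands in this post, and the command is only printed here, not executed.

```shell
# Hypothetical helper: print the ndb_mgm command that starts a backup.
# $1: management server connect string
# $2: optional backup id (left out, the cluster picks the next id itself)
start_backup_cmd() {
    if [ -n "$2" ]; then
        printf 'ndb_mgm -c %s -e "START BACKUP %s WAIT COMPLETED"\n' "$1" "$2"
    else
        printf 'ndb_mgm -c %s -e "START BACKUP WAIT COMPLETED"\n' "$1"
    fi
}

start_backup_cmd cluster-test01:1186,cluster-test02:1186
```

`WAIT COMPLETED` makes the client return only once the backup has finished, which is convenient when the command is part of a script.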

Let’s check the new row count of table ‘t1’ after all the delete operations have finished. From the image below, we can see that table ‘t1’ now has 306120 rows.

Let’s look at the files the backup created. Since we assigned a path for the backup files, let’s discuss these files briefly.

From the above image, we can see that one backup directory (BACKUP-backupid) is created for each backup, and 3 files are created under each backup directory. These are:

BACKUP-backupid-0.node_id.Data (BACKUP-1-0.1.Data):

This file contains most of the data stored in the table fragments owned by this node. In the above example, the first 1 is the backupid, 0 is a hardcoded value reserved for future use, and the second 1 is the node_id of data node 1.

BACKUP-backupid.node_id.ctl (BACKUP-1.1.ctl):

This file contains the metadata (schema) used to recreate the tables and indexes during restore.

BACKUP-backupid.node_id.log (BACKUP-1.1.log):

This file contains all the row changes made to the tables while the backup was in progress. During restore, these log records are rolled forward if the backup was taken as snapshot end, or rolled back if it was taken as snapshot start.
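The naming scheme above can be taken apart with plain shell parameter expansion. The helper name `describe_backup_file` is hypothetical; the sketch assumes file names follow exactly the three patterns listed above.

```shell
# Split a backup file name into its parts.
# Prints: "<backup_id> <node_id> <file type>"
describe_backup_file() {
    name=$1
    kind=${name##*.}          # text after the last dot: Data, ctl or log
    rest=${name%.*}           # e.g. BACKUP-1-0.1 or BACKUP-1.1
    node_id=${rest##*.}       # text after the remaining dot
    rest=${rest%.*}           # e.g. BACKUP-1-0 or BACKUP-1
    rest=${rest#BACKUP-}      # e.g. 1-0 or 1
    backup_id=${rest%%-*}     # drop the "-0" part that only Data files carry
    printf '%s %s %s\n' "$backup_id" "$node_id" "$kind"
}

describe_backup_file BACKUP-1-0.1.Data   # prints "1 1 Data"
describe_backup_file BACKUP-1.1.ctl      # prints "1 1 ctl"
```

The backup id and node id extracted this way are the values later passed to ndb_restore as `-b` and `-n`.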

Note:

Users can run the restore from anywhere, i.e. it does not have to be from any particular data node. ndb_restore is an NDB API client program, so it can run on any host that can access the cluster.

Step 5:

Upon successful completion of a backup, the output looks like the following:

From the above image, node 1 is the master node that initiated the backup, node 254 is the management node on which the START BACKUP command was issued, and Backup 1 is the first backup taken. #LogRecords 30000 indicates that transactions were also running on the same table while the backup was in progress. #Records shows the number of records captured across the cluster.

The backup status can also be seen in the cluster log:

2021-01-12 15:00:04 [MgmtSrvr] INFO -- Node 1: Backup 1 started from node 254

2021-01-12 15:01:18 [MgmtSrvr] INFO -- Node 1: Backup 1 started from node 254 completed. StartGCP: 818 StopGCP: 855 #Records: 306967 #LogRecords: 30000 Data: 5950841732 bytes Log: 720032 bytes
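When backups are scripted, the record counts can be pulled out of such a completion line with sed. This is a minimal sketch that assumes the log line has the same layout as the one above; the helper name `backup_counts` is hypothetical.

```shell
# Print "<records> <log_records>" from a "Backup ... completed" cluster log line.
# Prints nothing if the line does not carry both counters.
backup_counts() {
    printf '%s\n' "$1" |
        sed -n 's/.*#Records: \([0-9][0-9]*\) #LogRecords: \([0-9][0-9]*\).*/\1 \2/p'
}

line='2021-01-12 15:01:18 [MgmtSrvr] INFO -- Node 1: Backup 1 started from node 254 completed. StartGCP: 818 StopGCP: 855 #Records: 306967 #LogRecords: 30000 Data: 5950841732 bytes Log: 720032 bytes'
backup_counts "$line"   # prints "306967 30000"
```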

So this concludes our NDB Cluster backup procedure.

Step 6:

We will now try to restore the data from the backup taken above. Let’s shut down the cluster, clean up all the files except the backup files, and then start the cluster with --initial (i.e. with no data).

Let’s restore the backup to a different cluster. From the image below, we can see that the data node IDs are different from those of the cluster where the backup was taken.

Now let’s see whether our database ‘test1’ exists in the cluster after the initial start.

From the above image, we can see that database ‘test1’ is not present. Now let’s start the restore process from backup image #1 (BACKUP-1).

NDB restore works in this flow:

- It first restores the metadata from the *.ctl file so that all the tables/indexes can be recreated in the database.

- Then it restores the data files (*.Data), i.e. inserts all the records into the tables in the database.

- At the end, it applies the transaction logs (*.log), rolling back or rolling forward to make the database consistent.

- Since the restore will fail while restoring the unique and foreign key constraints taken from the backup image, the user must disable the indexes at the beginning and rebuild them once the restore is finished.
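The flow above can be summarised as a dry-run helper that prints the ndb_restore invocations in the order they would be issued. This is a sketch under stated assumptions: the helper name `restore_plan` is hypothetical, the node IDs 1 to 4 in the usage line are assumed for a backup taken on four data nodes, and nothing is executed.

```shell
# Hypothetical helper: print the ndb_restore commands for a full restore.
# $1: backup id, $2: backup directory, $3: connect string,
# remaining arguments: node ids of the data nodes that took the backup
restore_plan() {
    backup_id=$1; backup_dir=$2; connect=$3
    shift 3
    common="-b $backup_id --ndb-connectstring=$connect --backup_path=$backup_dir"
    first=$1
    # 1. Metadata, once, with indexes disabled
    echo "ndb_restore -n $first $common -m --disable-indexes"
    # 2. Data, once per backed-up node (these can run in parallel)
    for node in "$@"; do
        echo "ndb_restore -n $node $common -r"
    done
    # 3. Rebuild indexes, once, after all data has been restored
    echo "ndb_restore -n $first $common --rebuild-indexes"
}

restore_plan 1 /path/to/backup_directory cluster-test01:1186,cluster-test02:1186 1 2 3 4
```

Printing the plan first makes it easy to review the sequence before running any command against a live cluster.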

Let’s start with the restoration of the metadata.

Metadata restore, disabling of indexes, and data restore can be executed in one go, or can be done serially. The restore command can be issued from any data node, or from a non-data node as well.

In this example, I issue the metadata restore and disable the indexes from Data Node 1, only once. Upon successful completion, I will issue the data restore.

Data Node 1:

shell> bin/ndb_restore -n node_id -b backup_id -m --disable-indexes --ndb-connectstring=cluster-test01:1186,cluster-test02:1186 --backup_path=/path/to/backup_directory

-n: node ID of the data node from which the backup was taken. Do not confuse it with the data node ID of the new cluster.

-b: backup ID (our backup ID is ‘1’)

-m: metadata restoration (recreates tables/indexes)

--disable-indexes: disables restoration of indexes during the data restore

--ndb-connectstring (-c): connection string for the management nodes of the cluster

--backup_path: path to the backup directory where the backup files exist

Let’s start the data restore on Data Node 1.

Data Node 1:

shell> bin/ndb_restore -n node_id -b backup_id -r --ndb-connectstring=cluster-test01:1186,cluster-test02:1186 --backup_path=/path/to/backup_directory

Below, I have captured the log output of the data restore run, from when it started and from when it finished.

From the above image, we can see that the restore was successful. Restore skips restoration of system table data. The system tables referred to here are tables used internally by NDB Cluster, so they should not be overwritten by data from a backup. Backup data is restored in fragments, so whenever a fragment is found, ndb_restore checks whether it belongs to a system table. If it does, ndb_restore skips it and prints a 'Skipping fragment' log message.

Let’s finish the remaining data restores for the other data nodes. These data restores can be run in parallel to minimise the restore time. Here, we don’t pass -m and --disable-indexes to the restore command again, as that only needs to be done once. The first restore has already created the tables, indexes, etc., so there is no need to recreate them, and trying to do so would fail. Once all the data has been restored into the table(s), we will enable the indexes and constraints again using the --rebuild-indexes option. Rebuilding the indexes and constraints this way ensures that they are fully consistent when the restore completes.

Data Node 2:

shell> bin/ndb_restore -n node_id -b backup_id -r --ndb-connectstring=cluster-test01:1186,cluster-test02:1186 --backup_path=/path/to/backup_directory

Data Node 3:

shell> bin/ndb_restore -n node_id -b backup_id -r --ndb-connectstring=cluster-test01:1186,cluster-test02:1186 --backup_path=/path/to/backup_directory

Data Node 4:

shell> bin/ndb_restore -n node_id -b backup_id -r --ndb-connectstring=cluster-test01:1186,cluster-test02:1186 --backup_path=/path/to/backup_directory
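Because the per-node data restores are independent of each other, they can also be launched in the background from a single shell and then waited on. The following is a minimal sketch, not a tested procedure: `parallel_data_restore` and the `RESTORE_BIN` variable are hypothetical names, the node IDs in the usage line are assumptions, and by default the commands are only echoed.

```shell
# Run one data restore per backed-up node in the background, then wait for all.
# RESTORE_BIN defaults to "echo ndb_restore", which makes this a dry run;
# set RESTORE_BIN=bin/ndb_restore to run the real program.
# $1: backup id, $2: backup directory, $3: connect string, rest: node ids
parallel_data_restore() {
    backup_id=$1; backup_dir=$2; connect=$3
    shift 3
    pids=""
    for node in "$@"; do
        ${RESTORE_BIN:-echo ndb_restore} -n "$node" -b "$backup_id" -r \
            --ndb-connectstring="$connect" --backup_path="$backup_dir" &
        pids="$pids $!"
    done
    failed=0
    for pid in $pids; do
        wait "$pid" || failed=1    # remember if any restore failed
    done
    return "$failed"
}

parallel_data_restore 1 /path/to/backup_directory cluster-test01:1186,cluster-test02:1186 2 3 4
```

The function returns non-zero if any of the restores fails, so a wrapper script can stop before the index rebuild in that case.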

ndb_restore is an NDB API client program, so it needs a free API slot to connect to the cluster. Since we started 3 ndb_restore programs in parallel from the data nodes with IDs 4, 5 and 6, we can see from the image below that ndb_restore took API IDs 47, 48 and 49.

Let’s see the results from the remaining data nodes.

Since all the ndb_restore programs finished successfully, we can see that the API IDs they had taken to connect to the cluster have been released.

The last step is to rebuild the indexes. This can also be done from any data node or from any non-data node, but only once.

Data Node 1:

shell> bin/ndb_restore -n node_id -b backup_id --rebuild-indexes --ndb-connectstring=cluster-test01:1186,cluster-test02:1186 --backup_path=/path/to/backup_directory

--rebuild-indexes: enables rebuilding of ordered indexes and foreign key constraints.

Step 8:

We have now finished our restoration steps. Let’s check the database, the table, the row count of the table, etc.

Database ‘test1’ has been created.

Now we can see that table ‘t1’ has been created and that its row count is 306120, which matches our backup image (see Step 4).

This concludes our look at the NDB Cluster backup and restore feature. There are many more options that users can pass to both the backup (START BACKUP) and restore (ndb_restore) programs, depending on their requirements. In the above example, I have selected the basic minimum options a user might need for backup and restore. For more information on these options, please refer to the NDB Cluster reference manual.

Hey! I tried to do a restore and it failed like this. Thanks!!

[root@localhost mysql-cluster]# ndb_restore -n 2 -b 4 -m --disable-indexes --ndb-connectstring=192.168.0.39:1186 –backup_path=/var/lib/mysql-cluster/BACKUP/

Nodeid = 2

Backup Id = 4

backup path = –backup_path=/var/lib/mysql-cluster/BACKUP/

Opening file '–backup_path=/var/lib/mysql-cluster/BACKUP/BACKUP-4.2.ctl'

Failed to read –backup_path=/var/lib/mysql-cluster/BACKUP/BACKUP-4.2.ctl

NDBT_ProgramExit: 1 - Failed

This comment has been removed by a blog administrator.

ReplyDeleteSAP Grc training

ReplyDeleteSAP Secrity training

oracle sql plsql training

go langaunage training

azure training

java training

salesforce training

hadoop training

It's really awesome blog dear. i get lot of information. i also share some information. Hope you like it. Thanks for sharing it.

ReplyDeleteThis is really a good blog to read. It is so inspiring. Thanks for the amazing post to share and to gain knowledge.

ReplyDeleteNetwork Manaement System

Storage building management software

Server management application

Storage managemnet system

Service desk report

IT networking services

Backup Management Server

Security Management software

Excellent article, good concepts are delivered nice to read your article....

ReplyDeletehttps://patchlinks.com/fbackup-crack/

This comment has been removed by a blog administrator.

ReplyDeleteExcellent article, good concepts are delivered nice to read your article....

ReplyDeleteTop Crack Patch

ableton-live-crack

fbackup-crack

cleanmypc-crack

ReplyDeleteI am very impressed with your post because this post is very beneficial for me and provide a new knowledge…

incracks.com

restoro-crack

nitro-pro-crack

advanced-systemcare-pro-crack

Thank you sharing for your valuable content about mysql ndb cluster backup restore, is easy to understand and follow.

ReplyDeleteWe are offering 1-month free trial of backup on cloud and assuring the lowest price guarantee. Contact us: +91-9971329945

Please visit us our website:

web hosting

backup on cloud

best linux web hosting services

best windows hosting

android cloud backup solutions

A Nice post!

ReplyDeletehttps://topcracks.net/

Restoro crack

Panda Antivirus Pro crack

UnHackMe crack

Luminar crack

Panda Dome Premium crack

Wonderful Post!!! Thanks for sharing this great blog with us.

ReplyDeleteAndroid Course in Chennai

Android Online Course

Android Course in Coimbatore

Excellent Blog, I like your blog and It is very informative. Thank you

ReplyDeletePHP

Scripting Language

I appreciate your cooperation. Right on target I appreciate your help.Thank you so much for sharing all this wonderful info with the how-to's!!!! It is so appreciated!!! You always have good humor in your posts/blogs. So much fun and easy to read!

ReplyDeletecrack download

Output Portal Crack

UnHackMe Crack

4k Video Downloader Crack

FxSound Pro Crack

Thanks for this useful blog, keep sharing your thoughts...

ReplyDeleteUnix Program

Unix Applications

I guess I am the only one who comes here to share my very own experience guess what? I am using my laptop for almost the past 2 years.

ReplyDeleteFile Scavenger Crack

OpenShot Video Editor Crack

CadSoft Eagle Pro Crack

Ashampoo Burning Studio Crack

FBackup Crack

This comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDeleteThanks for sharing this informative post. Online cloud backup service agency offers Network and cyber security for businesses in ElizabethTown KY. Contact us today!

ReplyDeletenetwork security

ReplyDeleteI like your all post. You have done really good work. Thank you for the information you provide, it helped me a lot. wahabtech.net I hope to have many more entries or so from you.

Very interesting blog.

FBACKUP CRACK

PC Software Download

ReplyDeleteYou make it look very easy with your presentation, but I think this is important to Be something that I think I would never understand

It seems very complex and extremely broad to me. I look forward to your next post,

ACDSee Photo Studio Crack

Easypano Tourweaver Pro Crack

Easy Cut Studio Crack

Facebook Video Downloader Crack

PostgreSQL Maestro Crack

MediaMonkey Gold Crack

SuperAntiSpyware Professional Crack

Lucky Club Casino site (Updated) - Live Dealer

ReplyDeleteLucky Club Casino has over 80 카지노사이트luckclub casino games including slots, blackjack, roulette, video poker, keno and baccarat. There are a number of variations on each of the

My response on my own website. Appreciation is a wonderful thing...thanks for sharing keep it up. Ashampoo Backup Pro Crack

ReplyDeleteRemote Desktop Manager Crack

Nero Video Crack

IDrive Crack

Bitsum Process Lasso Pro Crack

IDM Crack

DiskBossis Crack

He?lo to every one, it’s really a fastid?ou? for me to go to ?ee th?s website, it include? important Information.

ReplyDeleteSerial Key Download

Very Nice Blog this amazing Software.

ReplyDeleteThank for sharing Good Luck!

Ashampoo Backup Pro Crack

HandBrake CS2 Crack

MacKeeper Crack

NCH Express Zip Crack

TeamViewer Crack

HandBrake CS2 Crack

WavePad Sound Editor Crack

Adobe Lightroom Crack

This comment has been removed by a blog administrator.

ReplyDeleteI am happy after visited this site. It contains valuable data for the guests. Much thanks to you!

ReplyDeletePC Software Download

IVT BlueSoleil Crack

Betternet VPN Premium Crack

iCare Data Recovery Pro Crack

Easy Cut Studio Crack

StudioLine Web Designer Crack

Xara Web Designer Premium Crack

Best Softwares

ReplyDeleteEasy Cut Studio Crack

Betternet VPN Premium

Wondershare Recoverit Crack

It is the best website for all of us. It provides all types of software and apps which we need. You can visit this website.

ReplyDeletefbackup-crack

kurulus-osman-crack

corel-videostudio-crack

I am happy after visited this site. It contains valuable data for the guests. Much thanks to you!

ReplyDeleteCrack Download

File Scavenger Crack

IObit Malware Fighter Crack Mac

Pianoteq Crack

Garena Free Fire Crack

Mindjet Mindmanager Crack

Such a Nice post. Thanks for Awesome tips Keep it up

ReplyDeletePostgreSQL Maestro Crack

Beyond Compare Crack

MacBooster Crack

RemoveWAT Crack

Clip Studio Paint EX Crack

Dragon Naturally Speaking crack

AnyToISO Professional Crack

Origin Pro Crack

WinToUSB Enterprise Crack

Astroburn Pro Crack

This comment has been removed by a blog administrator.

ReplyDeleteThis comment has been removed by a blog administrator.

ReplyDeleteAmazing and Creative blogs that you see.. Its latest version indormation......'

ReplyDeleteGolden Software Surfer Crack

Little Alterboy Crack

MemTest86 Pro Crack

RecordPad Sound Recorder crack

WinX HD Video Converter Deluxe Crack

WinZip Disk Tools Crack

ThunderSoft GIF Converter Crack

Secure Eraser Professional Crack

MediaHuman YouTube Downloader Crack

EaseUS MobiMover Pro Crack

I like your all post. You have done really good work. Thank you for the information you provide, it helped me a lot. prosvst.com I hope to have many more entries or so from you.

ReplyDeleteVery interesting blog.

Backup4all Professional Crack

ReplyDeleteAmazing blog! I really like the way you explained such information about this post with us. And blog is really helpful for us this website

nordvpn-crack

ashampoo-pdf-pro-crack

backup4all-pro-crack

proshow-producer-crack

drm-converter-crack

4k-video-downloader-crack

xyplorer-pro-crack

Thanks For Sharing...Such a nice post...

ReplyDeleteNIUBI Partition Editor Crack

Betternet VPN Premium Crack

Movavi Slideshow Maker Crack

HMA Pro VPN Crack

I really like your content. Your post is really informative. I have learned a lot from your article and I’m looking forward to applying it in my article given below!.

ReplyDeleteRestoro Crack

EaseUS Partition Master Crack

Text Scanner OCR Mod APK Crack

DeepL Pro Crack

Reimage Pc Repair Crack

CreateStudio Crack

I am very happy to read this article. Thanks for giving us Amazing info. Fantastic post.

ReplyDeleteThanks For Sharing such an informative article, Im taking your feed also, Thanks.adobe-indesign-cc-crack/

I really like your content. Your post is really informative. I have learned a lot from your article and I’m looking forward to applying it in my article given below!.

ReplyDeleteRestoro Crack

EaseUS Partition Master Crack

Text Scanner OCR Mod APK Crack

DeepL Pro Crack

Reimage Pc Repair Crack

CreateStudio Crack

The Foundry Nuke Studio crack

DeepL Pro Crack

Blue Iris Crack

IZotope RX 8 Audio Editor Advanced Crack
